What Is a Load Balancing Server? | Parallels explains

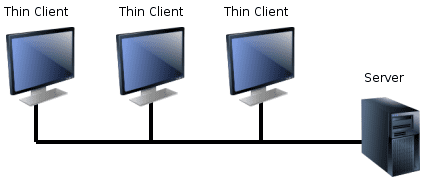

A load balancing server is an appliance (hardware or virtual machine) or software application that’s usually deployed between end-user devices and a data center (server farm or cluster). Its primary function is to detect available resources (nodes) in the server farm, receive traffic coming in from clients, and distribute the traffic to those available nodes.

How traffic is distributed depends on the method—or load balancing algorithm—implemented by the load balancing server. There are several load balancing algorithms, but some of the most common are round-robin, least connection, and random.

What Is the Purpose of Load Balancing?

The purpose of load balancing is to regulate the traffic on a network and prevent servers from getting overloaded. If you deploy a single server against a growing number of clients, that server can eventually get overloaded. One way to prevent that from happening is to add more servers. However, simply adding more servers is only half the solution.

Something still has to decide which server receives each request. If traffic is directed to just one server (or only a few servers in the farm), those servers can still get overloaded. You can improve this setup by placing a load balancer in front of the server farm so it can distribute incoming traffic efficiently across all the servers.

Why Is It Important to Businesses and Organizations?

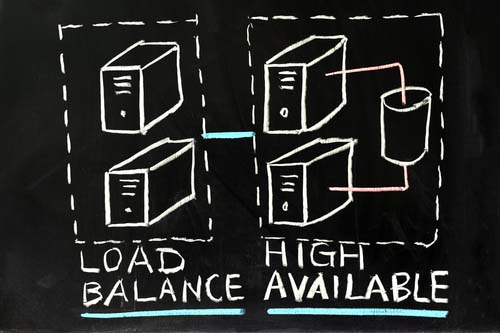

When an organization offers services to its customers and other end-users, it has to ensure the high availability of those services, often on a 24/7 basis. For example, if a company is offering virtual application delivery via Remote Desktop Session Host (RDSH) to remote desktop (RD) clients, it has to ensure that each client can always connect to a healthy (not overloaded) RDSH server.

This can be done through a load balancing server—which, traditionally, would be a third-party solution that has to be installed, configured, and deployed separately. A load balancing server offers many benefits to an organization, including scalability, reliability, flexibility, and security.

1. Scalability – Load balancing allows IT managers to add as many servers as they need to the existing infrastructure without reconfiguring each piece of hardware and software. The result is an environment that can expand with the organization's requirements.

2. Reliability – If one server has a problem, the load balancer automatically routes incoming traffic to the remaining available servers. This keeps the service running even when individual servers fail.

3. Flexibility – During maintenance, IT managers can direct all the traffic to one server while the others are serviced, with the load balancer running in active or passive mode. The organization can therefore stagger maintenance without affecting the site’s uptime.

4. Security – A load balancer can distribute loads to backend servers without revealing their IP addresses. Because outsiders see only a single public IP address, it is harder for attackers to target vulnerabilities on individual servers directly.
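The reliability benefit above can be illustrated with a minimal Python sketch. It assumes a hypothetical `Pool` class (not part of any real product) that rotates through servers and skips any that a health check has marked as down. How health is actually detected is outside the scope of this sketch.

```python
class Pool:
    """Hypothetical server pool that skips servers marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._next = 0  # rotation position

    def mark_down(self, server):
        # Called when a health check fails (detection itself is not shown).
        self.healthy.discard(server)

    def mark_up(self, server):
        # Called when a previously failed server passes its check again.
        if server in self.servers:
            self.healthy.add(server)

    def pick(self):
        # Try each server at most once, starting at the rotation position.
        for _ in range(len(self.servers)):
            server = self.servers[self._next]
            self._next = (self._next + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

With servers `a`, `b`, and `c`, calling `mark_down("b")` means later calls to `pick()` alternate between `a` and `c` until `mark_up("b")` is called.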

Algorithms Used by a Load Balancing Server

Your requirements determine which load balancing algorithm to choose, as each algorithm offers different benefits:

- Round-Robin – Requests are sent to each server in turn, returning to the first server after the last one has received a request.

- Least Connections – Each new request is sent to the server that has the fewest active client connections.

- Least Time – Each request is sent to the server with the best combination of fast response time and few active connections.

- Hash – Requests are distributed based on a hash of a key you specify, such as the client IP address or the request URL.

- IP Hash – The client’s IP address is hashed to determine which server receives the request, so the same client is consistently sent to the same server.

- Random with Two Choices – Picks two servers at random, then uses the Least Connections algorithm to choose between them and sends the request to that server.
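To make the differences between these algorithms concrete, here is a minimal Python sketch of four of them. The function names, the `active` dictionary of connection counts, and the choice of SHA-256 for hashing are all assumptions made for illustration. Real load balancers implement these differently and track connection state themselves.

```python
import hashlib
import itertools
import random


def round_robin(servers):
    """Return an iterator that yields servers in turn, wrapping around."""
    return itertools.cycle(servers)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps a server name to its current connection count.
    """
    return min(active, key=active.get)


def ip_hash(servers, client_ip):
    """Map a client IP to a server so the same IP always gets the same server."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


def random_two_choices(active, rng=random):
    """Sample two distinct servers at random, keep the less loaded one."""
    first, second = rng.sample(list(active), 2)
    return first if active[first] <= active[second] else second
```

Note that this simple modulo-based `ip_hash` remaps many clients whenever the server list changes. Least Time is omitted because it needs live response-time measurements.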

Load Balancing with Parallels RAS

When it comes to RDSH and VDI load balancing, Parallels® Remote Application Server (RAS) makes it easy. Parallels RAS comes with load balancing capabilities out of the box. It monitors each host in the server farm for CPU usage, memory usage, and the number of concurrent sessions, and then redirects incoming connections to the host with the least load.
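As a rough illustration of resource-based balancing in general, the following Python sketch picks the host with the lowest combined load. It is not the Parallels RAS implementation: the `Host` fields, the equal weighting of the three counters, and the `max_sessions` limit are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    cpu_pct: float     # CPU usage, 0-100
    mem_pct: float     # memory usage, 0-100
    sessions: int      # current concurrent sessions
    max_sessions: int  # hypothetical per-host session limit


def load_score(host):
    """Average the three counters, each expressed as a percentage."""
    session_pct = 100.0 * host.sessions / host.max_sessions
    return (host.cpu_pct + host.mem_pct + session_pct) / 3.0


def pick_least_loaded(hosts):
    """Return the host with the lowest combined load score."""
    return min(hosts, key=load_score)
```

For example, given three hosts with scores of roughly 73, 30, and 50, `pick_least_loaded` returns the host that scores 30.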

Try the 30-day evaluation period of Parallels RAS to experience quick and easy load balancing yourself.